
Colorspace

"The real trick to optimizing color space conversions is of

course to not do them." --trbarry, April 2002

There are two main ways to store video digitally: RGB and YUV. Each comes in a few variations that differ in how accurately they store color, but that's it (e.g. RGB16, RGB24 and RGB32, and then plain YUV, YUY2, YV12, I420 and so on).

RGB stores video rather intuitively. For every pixel it stores a value for each of the three color channels: red, green and blue. The most common RGB format on computers these days is RGB24, which gives 8 bits to each channel. That is where the 0-255 range comes from, since 2 to the 8th power is 256. So white is 255,255,255 and black is 0,0,0.
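To make the 8-bits-per-channel arithmetic concrete, here is a minimal sketch of one RGB24 pixel and the size of an uncompressed frame. The function names are illustrative only, the R, G, B byte order is chosen for readability (real file formats may order the channels differently), and 720x480 is just an example frame size.

```python
# Sketch of RGB24: three 8-bit channels per pixel.
BITS_PER_CHANNEL = 8
LEVELS = 2 ** BITS_PER_CHANNEL  # 256 possible values per channel, 0..255

WHITE = (255, 255, 255)
BLACK = (0, 0, 0)


def pack_rgb24(r: int, g: int, b: int) -> bytes:
    """Pack one pixel into 3 bytes.

    R, G, B order is used here for readability; real file formats
    may store the channels in a different order.
    """
    for value in (r, g, b):
        if not 0 <= value < LEVELS:
            raise ValueError(f"channel value {value} is outside 0..{LEVELS - 1}")
    return bytes((r, g, b))


def rgb24_frame_bytes(width: int, height: int) -> int:
    """Size of one uncompressed RGB24 frame: 3 bytes per pixel."""
    return width * height * 3


print(LEVELS)                       # 256
print(pack_rgb24(*WHITE).hex())     # ffffff
print(rgb24_frame_bytes(720, 480))  # 1036800
```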

RGB relies on the fact that three color components can be added together to produce a wide range of colors. In contrast, YUV stores color in a way that mirrors how the human brain perceives it.

Now for an explanation: the first thing the human brain picks up on is brightness, also known as "luma". Luma can be calculated fairly easily from the RGB channels as a weighted average, giving some colors more weight than others.

Scientists came up with weights that match human perception: green contributes the most, red about half as much as green, and blue about a third as much as red. Why this is the case is simply a matter of how the brain works, and this perceptual model is central to how YUV was developed.
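As a sketch of that weighted average, the snippet below uses the ITU-R BT.601 weights (0.299, 0.587, 0.114), the standard-definition set that matches the proportions described above. It works on full-range 0-255 values and ignores the offsets and scaling that stored video formats apply; the function name is illustrative.

```python
# Luma as a weighted average of R, G and B (ITU-R BT.601 weights).
KR, KG, KB = 0.299, 0.587, 0.114  # the three weights add up to 1.0


def luma(r: float, g: float, b: float) -> float:
    """Perceived brightness for full-range 0..255 RGB input."""
    return KR * r + KG * g + KB * b


# Green counts most, red roughly half of green, blue roughly a third of red.
print(round(KR / KG, 2))           # 0.51
print(round(KB / KR, 2))           # 0.38
print(round(luma(255, 255, 255)))  # 255 (white)
```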

Luma is a simple non-negative value where zero means black and high values mean white.

The color information is not so simple. The two color channels are called U and V (or sometimes Cb and Cr, respectively). They can take both positive and negative values, which matches the way our brain processes color.

When Cr is positive, the object is red; when Cr is negative, the object is green. Our brain treats these two colors as opposites: if you think about it, no object ever looks reddish-green.

When Cb is positive, it indicates a blue object; when Cb is negative, yellow. Again, these are opposites for our brain, and that is the reasoning behind YUV as a color model.
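The sign behavior can be checked with a small sketch that derives Cb and Cr from the BT.601 luma weights. The scaling used here (divide B-Y and R-Y so each channel spans roughly -127.5 to 127.5) is one standard choice; stored 8-bit video adds an offset and usually rescales further, which this sketch deliberately leaves out. Names are illustrative.

```python
# Signed color-difference channels, built on the BT.601 luma weights.
KR, KG, KB = 0.299, 0.587, 0.114


def rgb_to_ycbcr(r: float, g: float, b: float) -> tuple:
    """Return (Y, Cb, Cr) for full-range 0..255 RGB.

    Cb and Cr are left signed and centred on zero (about -127.5..127.5);
    stored 8-bit video adds an offset and usually rescales them.
    """
    y = KR * r + KG * g + KB * b
    cb = (b - y) / (2 * (1 - KB))  # > 0 bluish, < 0 yellowish
    cr = (r - y) / (2 * (1 - KR))  # > 0 reddish, < 0 greenish
    return y, cb, cr


for name, rgb in [("red", (255, 0, 0)), ("green", (0, 255, 0)),
                  ("blue", (0, 0, 255)), ("yellow", (255, 255, 0)),
                  ("grey", (128, 128, 128))]:
    y, cb, cr = rgb_to_ycbcr(*rgb)
    print(f"{name:6} Y={y:6.1f} Cb={cb:7.1f} Cr={cr:7.1f}")
```

Note that the grey pixel comes out with Cb and Cr at zero: a colorless pixel carries all of its information in Y.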

So, why is it useful to store pictures in YUV?

There are a couple of reasons:

- A historical reason: when color TV was invented, it needed to be compatible with black-and-white TV in both directions. The old B&W signal became luminance, and two color channels were added on top of it. Old TVs simply ignore the two extra channels, while color TVs treat a B&W signal as having zero chroma.

- You get one channel that is much more important and two channels that are less important (but still necessary). You can play tricks with this idea, as you will see.

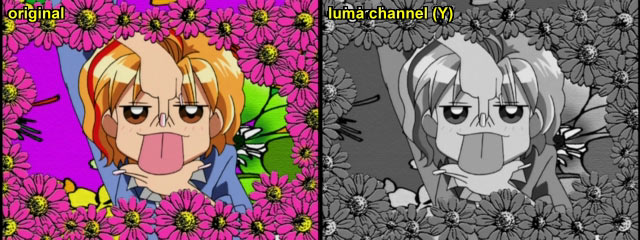

So, when dealing with YUV you can imagine Y as being the black

and white image then U

and V as the "coloring" of the image. Here's a visual example:

You can see straight away that the color information is much less detailed. That is true, but even if it weren't, the reality is that you just can't perceive detail as well in the chroma channels. Remember your biology, rods and cones: you have far more rods than cones, so you can't see color as sharply as you can see luma.

Although you can have one Y, U and V sample per pixel, just as you do with R, G and B, it is common for the chroma (the U and V) to be sampled less often, because reduced chroma accuracy is less noticeable. There are many ways to do this, but we are going to demonstrate the two that you will deal with most: YUY2 and YV12.

YUY2 is a type of YUV that samples luma once for every pixel but samples chroma only once for every horizontal pair of pixels. The point is that the human eye doesn't really notice that two neighboring pixels share the same chroma when their luma values differ. It's just like the way you can be less precise when coloring in a black and white picture than when drawing the picture from scratch with only colored pencils.

So basically YUY2 stores color data at a lower accuracy than RGB without us really noticing much. Effectively, the chroma information has half the regular horizontal resolution.
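Here is a minimal sketch of that pairwise chroma sampling on a single row. It averages the two chroma values in each pair, which is one common choice (real converters may filter or pick samples differently), and it emits groups in YUY2's Y0, U, Y1, V order. The function and type names are illustrative.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # (Y, U, V) for one pixel


def to_yuy2_row(row: List[Pixel]) -> List[Tuple[float, float, float, float]]:
    """Subsample one row to 4:2:2.

    Every Y is kept; U and V are averaged over each horizontal pair of
    pixels. Output groups follow YUY2's Y0, U, Y1, V ordering.
    """
    if len(row) % 2:
        raise ValueError("YUY2 needs an even number of pixels per row")
    packed = []
    for (y0, u0, v0), (y1, u1, v1) in zip(row[0::2], row[1::2]):
        packed.append((y0, (u0 + u1) / 2, y1, (v0 + v1) / 2))
    return packed


row = [(100, 10, 20), (200, 30, 40), (50, 0, 0), (60, 100, -100)]
print(to_yuy2_row(row))  # [(100, 20.0, 200, 30.0), (50, 50.0, 60, -50.0)]
# 4 pixels -> 4 Y + 2 U + 2 V = 8 values instead of 12
```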

Because YUY2 shares chroma between pixel pairs, when you convert between YUY2 and RGB you either lose some data (as the chroma is averaged), or assumptions have to be made and data must be guessed at or interpolated (because the chroma has already been averaged, there is no way to find out what the real values were).
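A tiny round trip shows why that lost detail cannot come back. This sketch averages chroma pairs and then rebuilds one sample per pixel by simple duplication; duplication is just the crudest possible guess (real converters usually interpolate), but no method can restore the sharp edge once it has been averaged away. Names are illustrative.

```python
def subsample_pairs(chroma):
    """Average each horizontal pair of chroma samples (one row, even length)."""
    return [(a + b) / 2 for a, b in zip(chroma[0::2], chroma[1::2])]


def upsample_duplicate(chroma):
    """Guess one sample per pixel again by repeating each averaged sample."""
    restored = []
    for c in chroma:
        restored.extend([c, c])
    return restored


original = [0, 100, 100, 100]  # a sharp color edge between pixels 0 and 1
restored = upsample_duplicate(subsample_pairs(original))
print(restored)  # [50.0, 50.0, 100.0, 100.0]
```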

Even less chroma sampling: YV12

YV12 is much like YUY2 but takes this one step further. Where YUY2 samples chroma once for every 2 pixels in a row, YV12 samples chroma once for every 2x2 block of pixels! You'd think that having only one chroma sample per 2x2 square would look terrible, but the fact is that we don't really notice the difference all that much. And because there are so many fewer chroma samples (half the luma resolution in each direction, so a quarter as many samples), there is less information to store, which is great for saving bits. All major distribution codecs use this one-chroma-sample-per-four-pixels method, including MPEG2 on DVDs.

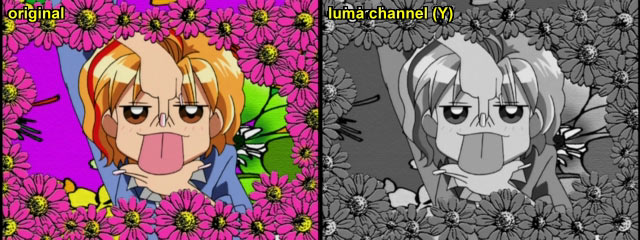

The top image is the original and the one below it has been sampled with YUV 4:2:0 (YV12). Notice how the colors of the hairline at the top left become muddled because the chroma is averaged between pixels.
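The 2x2 averaging and the storage savings described above can be sketched as follows. The subsampler uses a plain box average of each 2x2 block (real encoders may position and filter chroma differently), and the byte counts assume 8-bit samples with a 720x480 frame as an example size. Names are illustrative.

```python
def subsample_420(plane):
    """Average each 2x2 block of a chroma plane (a list of rows).

    Assumes even width and height; a plain box average is used here.
    """
    height, width = len(plane), len(plane[0])
    return [
        [(plane[y][x] + plane[y][x + 1] + plane[y + 1][x] + plane[y + 1][x + 1]) / 4
         for x in range(0, width, 2)]
        for y in range(0, height, 2)
    ]


def frame_bytes(width, height, scheme):
    """Bytes in one 8-bit YUV frame: a full Y plane plus two chroma planes."""
    luma = width * height
    per_chroma_plane = {
        "4:4:4": luma,       # one U and V per pixel
        "4:2:2": luma // 2,  # YUY2: one per horizontal pair
        "4:2:0": luma // 4,  # YV12: one per 2x2 block
    }[scheme]
    return luma + 2 * per_chroma_plane


print(subsample_420([[0, 4, 8, 8],
                     [4, 8, 8, 8]]))  # [[4.0, 8.0]]
for scheme in ("4:4:4", "4:2:2", "4:2:0"):
    print(scheme, frame_bytes(720, 480, scheme))  # 1036800, 691200, 518400
```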

The sharp among you may think "um, OK, but what if the image is interlaced? You'd be sampling color from two different fields!" and you'd be right... which is why interlaced YV12 footage has to be sampled one field at a time instead of one frame at a time.
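This sketch lists which source lines get merged into one row of 4:2:0 chroma in each case. Frame-based sampling pairs neighboring lines, which in interlaced footage come from different fields; field-based sampling pairs lines of the same field instead. The function name is illustrative, and it only models which lines are combined, not how they are weighted.

```python
def chroma_line_groups(num_lines, interlaced):
    """Which source lines share one row of 4:2:0 chroma.

    Progressive: neighboring lines are paired, (0, 1), (2, 3), ...
    Interlaced: each field is subsampled on its own, so lines from the
    same field are paired, (0, 2), (1, 3), (4, 6), (5, 7), ...
    Assumes num_lines is a multiple of 4.
    """
    if num_lines % 4:
        raise ValueError("num_lines must be a multiple of 4")
    if not interlaced:
        return [(i, i + 1) for i in range(0, num_lines, 2)]
    groups = []
    for base in range(0, num_lines, 4):
        groups.append((base, base + 2))      # top field: even lines
        groups.append((base + 1, base + 3))  # bottom field: odd lines
    return groups


print(chroma_line_groups(8, interlaced=False))  # [(0, 1), (2, 3), (4, 5), (6, 7)]
print(chroma_line_groups(8, interlaced=True))   # [(0, 2), (1, 3), (4, 6), (5, 7)]
```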

Colorspace Conversions

Converting back and forth between colorspaces is bad because you can lose detail, as mentioned, and it also slows things down. So you want to avoid colorspace conversions as much as possible. But how?

Well, you need to know two things: the colorspace of your footage and the colorspace used by your programs.

Footage Colorspaces:

DVDs - These use MPEG2 with 4:2:0 YUV (YV12) color. There is one chroma sample for each 2x2 square of pixels.

DV - This uses 4:1:1 YUV, which has the same number of chroma samples as MPEG2 but arranged differently.

Mjpeg - This can use all kinds of YUV sampling, but 4:2:0 (YV12) is very common.

MPEG1, 2 and 4 (DivX etc.) all use YV12 color (they can support other YUV modes in theory, just not in practice). There are MPEG2 profiles (such as the Studio Profile) that can deal with 4:2:2 chroma, but mostly you will see 4:2:0 chroma being used.

HuffYUV - The original versions support YUY2 and RGB, but some modified versions of the codec, such as the one found in FFDShow, can also support YV12.
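The DV entry's claim that 4:1:1 carries as much chroma as 4:2:0 is easy to check by counting samples. This sketch counts the samples in one chroma plane for a few common schemes, using 720x480 purely as an example frame size; the function name is illustrative.

```python
def chroma_samples(width, height, scheme):
    """Samples in one chroma plane (U or V) for a given subsampling scheme."""
    if scheme == "4:1:1":  # one chroma sample per 4 pixels along each row
        return (width // 4) * height
    if scheme == "4:2:2":  # one per horizontal pair
        return (width // 2) * height
    if scheme == "4:2:0":  # one per 2x2 block
        return (width // 2) * (height // 2)
    raise ValueError(f"unknown scheme: {scheme}")


# 720x480 frame: 4:1:1 and 4:2:0 carry the same amount of chroma,
# just arranged differently (full vertical, quarter horizontal vs half and half).
print(chroma_samples(720, 480, "4:1:1"))  # 86400
print(chroma_samples(720, 480, "4:2:0"))  # 86400
```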

Premiere, like almost all video editing programs, works in RGB because it's easier to deal with mathematically. Premiere demands that all incoming video be in RGB32 (specifically, 24-bit color with an 8-bit alpha channel) and will convert the YUV footage you give it to that format for processing. Even Premiere Pro, which billed itself as able to support YUV formats, can only support 4:4:4 uncompressed YUV, which is hardly any different from RGB. The native DV support is useful, but it still doesn't justify all the hype, as very few plugins (including Adobe's own sample code) actually use the YUV support at all.

Avisynth, one of the primary video processing tools we will be using, supports RGB, YUY2 and YV12 video (although many of its plugins may only support YV12). This is fine most of the time, although occasionally you might want to use a filter that requires a colorspace conversion.

The optimal scenario involves only 2 colorspace conversions: take MPEG2 from DVD in YV12, process it with Avisynth in YV12, and then convert to RGB32 ready for editing. Export from the editing program in RGB, then convert to YV12 ready for the final video compressor. By doing this you save both time and quality, because you avoid unnecessary colorspace conversions.
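One way to sanity-check a workflow is to write down the colorspace at each stage and count how often it changes. The stage names below are illustrative, and the "wasteful" pipeline is a hypothetical example of a YUY2-only filter sneaking into an otherwise YV12 chain.

```python
def count_conversions(stages):
    """Count how many times the colorspace changes along a pipeline."""
    return sum(1 for (_, a), (_, b) in zip(stages, stages[1:]) if a != b)


recommended = [
    ("DVD MPEG2 source", "YV12"),
    ("Avisynth processing", "YV12"),
    ("Editing program", "RGB32"),
    ("Export from editor", "RGB32"),
    ("Final compressor", "YV12"),
]

# Hypothetical detour through a YUY2-only filter adds two more conversions.
wasteful = [
    ("DVD MPEG2 source", "YV12"),
    ("YUY2-only filter", "YUY2"),
    ("Avisynth processing", "YV12"),
    ("Editing program", "RGB32"),
    ("Export from editor", "RGB32"),
    ("Final compressor", "YV12"),
]

print(count_conversions(recommended))  # 2
print(count_conversions(wasteful))     # 4
```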

Thankfully, with a little knowledge of these colorspaces, you can avoid conversions, or at least only do them when you really need to.

ErMaC, AbsoluteDestiny, Syskin and Zarxrax - August 2008
